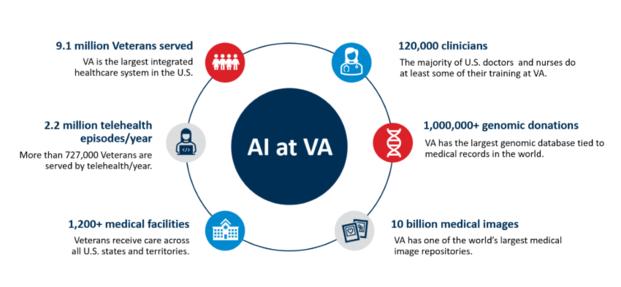

The Department of Veterans Affairs (VA) has increased its reliance on artificial intelligence to improve services for veterans, including anticipating crisis situations and streamlining operations.

Although AI is not new to the VA, lawmakers and the Government Accountability Office (GAO) have raised concerns about privacy and about whether staffing is keeping pace with the department's growing reliance on the technology.

"Veterans deserve proven, safe care–not services driven by experimental AI tools that are deployed without oversight,” said Sydney Saubestre, senior policy analyst at New America’s Open Technology Institute, in response to heightened AI use at the VA.

Between 2023 and 2024, AI use cases at the VA increased from 40 to 229, and generative AI applications increased from one to 27, according to a Sept. 15 GAO report on AI practices at the VA. These uses include automating medical imaging, summarizing patient records, and supporting more efficient decision-making.

This trend reflects a larger shift across government agencies, according to Quinn Anex-Ries, a senior policy analyst at the Center for Democracy and Technology.

“At the end of 2024, in late December and early January [2025], all federal agencies published updates to their AI use case inventories … We saw a 200% increase in reported use cases,” Anex-Ries said.

Saubestre highlighted the VA's long history of healthcare innovation: breakthroughs ranging from pacemakers and liver transplants to evidence-based PTSD treatment were piloted at the VA.

“Evidence-based improvements are critical to ensuring veterans receive the care they need,” Saubestre said.

But she also acknowledged the challenges that AI poses within the VA.

“While AI has the potential to improve veterans’ healthcare, that is far from a foregone conclusion,” she said. “These technologies must undergo clear, transparent, and ethically grounded testing to ensure that adequate safeguards are in place and that there are no disparate impacts.”

Anex-Ries made a similar point about AI use in both VA benefits and healthcare, explaining that potential harms range from wasted taxpayer dollars and reduced efficiency within the agency to false accusations of fraud against a beneficiary or wrongful denials of claims, which could prevent people from accessing healthcare or benefits they rightly qualify for.

“The stakes are really high,” he said.

The Safety of Veterans’ Data: ‘Among the Most Sensitive Information’

A GAO report published in September 2025, titled “Veterans Affairs: Key AI Practices Could Help Address Challenges,” identified major challenges the VA faces with AI, including complying with federal policies, securing sufficient technology and budget, and developing a workforce proficient in AI.

This report was led by Carol Harris, GAO director for information technology and cybersecurity, and used in testimony requested by the House Veterans’ Affairs Subcommittee on Technology Modernization.

Above all, data security remains a top concern, since veterans’ health information and privacy must not be breached. The GAO found that the VA’s current practices do not fully incorporate risk management.

The report also found that 26 earlier recommendations made to the VA had not been implemented and that the VA's AI inventory had not been updated, raising concerns about the department’s management of its IT resources.

Darrell West, senior fellow at the Center for Technology Innovation at the Brookings Institution, spoke more broadly about the need for strong privacy safeguards across the entire healthcare industry.

“Privacy is a huge concern for patients, especially in the healthcare area, just because medical information is so sensitive. The United States does not have a national privacy law, which is a big problem,” West said. “In the absence of that, it's important for various agencies to maintain the highest degree of privacy and security for veterans’ data because nobody wants their personal information to get out.”

Saubestre said that as federal guardrails on the government’s use of AI and data are rolled back, the rapid push to scale these technologies increases the risk that veterans’ privacy and security will not be adequately protected.

“Veterans’ health data is among the most sensitive information the government holds, and AI use in this space demands stronger protections, not fewer,” Saubestre said.

AI has the potential to make veteran care more operationally efficient, and the department has taken steps such as creating an AI use case inventory. Still, experts and documents such as the GAO report warn that oversight bodies have limited visibility into the full scope of the VA's AI capabilities.

In July 2023, the VA created a “Trustworthy AI Framework” focused on the principles of “purposeful, effective and safe, secure and private, fair and equitable, transparent and explainable, and accountable and monitored,” according to the VA website.

In January 2025, in line with President Donald Trump’s executive order “Removing Barriers to American Leadership in Artificial Intelligence,” the VA moved forward with further investments in AI solutions to “support the delivery of world-class benefits and services to veterans.”

Concerns on the Hill

Lawmakers on Capitol Hill have stressed the importance of proper AI protocols as the technology's use at the VA grows.

Members of the House Veterans’ Affairs Committee have noted that while the VA is advancing AI tools, its basic IT infrastructure still struggles, raising questions about whether new technology can be implemented effectively.

“VA is still working with the crumbling IT infrastructure and still grapples to modernize systems and workflows,” said Democratic Rep. Nikki Budzinski of Illinois during a House Veterans’ Affairs Subcommittee on Technology Modernization Oversight hearing on Sept. 15, 2025.

“Since the last hearing, the department has been entangled in multiple cybersecurity incidents, which have potentially placed veterans’ data at risk. Though many of these breaches have been targeted at VA contractors, veterans’ data has still been implicated, and VA maintains some responsibility for its safety,” Budzinski continued.

Anex-Ries explained that investing in AI must include guarding against these types of breaches, which requires adequate staffing for risk assessments and monitoring.

“It’s really critical to make sure that you actually have those budgetary, staffing, and resource considerations accounted for before you acquire a tool,” he said.

During the Sept. 15 hearing, Budzinski stressed the urgency of promptly filling the chief information officer role, given its growing importance to these issues.

Eddie Pool is currently the acting VA chief information officer (CIO), a position that oversees the department's technology and cybersecurity programs, with a budget of more than $7 billion and 16,000 employees.

Former CIO Kurt DelBene left the position in January. Ryan Cote was nominated to take over by July, but the nomination was withdrawn, which may reflect the position’s volatility.

“This position is particularly critical as we see the acceleration and progression of modernization efforts at the department,” Budzinski said. “It seems the VA still lacks a coherent enterprise IT strategy, leaving projects like AI integration to happen in silos.”

Charles Worthington currently fills the role of both chief technology officer and chief artificial intelligence officer.

During the same hearing, he said the “VA’s strategic vision is to make the department an industry leader in AI that improves veterans’ lives by delivering faster, higher quality, and more cost-efficient services, with strong governance and trust.”

AI Tools at the VA

Worthington said that VA GPT, a tool with more than 85,000 employee users, saves an average of 2.5 hours per week, and that 80% of survey respondents agreed it improves their efficiency.

However, he did not explain how these reported efficiency gains and time savings translated into improvements in other VA services, such as expanded in-person care.

Another popular tool, GitHub Copilot, had more than 2,000 users and saved eight hours of work per week. Worthington said this tool is “making it easier to refill prescriptions and apply for benefits, and the improvement of backend systems that accelerate claims processing.”

The Stratification Tool for Opioid Risk Mitigation, known as STORM, is used to identify and mitigate overdose and suicide risk among veterans who are prescribed opioids or have opioid use disorder.

“Healthcare teams reviewed the care of over 28,700 veterans identified by STORM in the past year alone, decreasing mortality in high-risk patients by 22%,” Worthington said.

With so many AI tools in use, Saubestre warned that rapid innovation should not push privacy concerns aside.

“Contestability and transparency are critical: These technologies must be subject to enforceable privacy standards, rigorous oversight, and ongoing accountability,” she said.

Another challenge is ensuring access to AI innovation across the United States. According to West, that means addressing regional inequities in parts of the country where technological capabilities lag behind.

He warned that these gaps could leave certain areas and citizens behind as AI delivers efficiency gains elsewhere.

“The AI applications are getting better, so I think it's helpful that the technology is being more widely embraced,” West said. “But we have to make sure that we can maintain privacy and ensure that everybody has similar access to the technology.”

Anex-Ries warned that algorithmic bias could compound existing disparities, citing research on bias and discrimination in certain AI tools.

“With regional VA offices, it’s not just about whether headquarters has the right resources,” he said. “You have to ask whether local offices have the staffing and budget to use these tools appropriately.

“If they don’t, it’s likely those offices aren’t ready to adopt an AI tool.”